For any of you that have picked up old Fusion-io devices on eBay to use as fast VMFS datastores, it appears that these are not going to be supported in ESXi 7.0 and beyond. I'm honestly surprised the driver I have been using has worked as long as it has (scsi-iomemory-vsl-60L-3.2.16.1731-offlinebundle-9738096.zip), but it now appears that.

Fusion-io announced its acquisition of ID7, a UK-based storage technology company founded in 2006. ID7 is known for software-defined storage (SDS), open-source work in shared storage, and as the developer of the SCSI target subsystem (SCST) for Linux.

This is a complete repost of Chris Mellor’s terrific article from last week:

Gotta be PCIe and not SAS or SATA

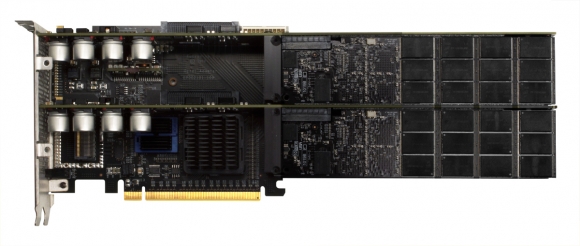

Fusion-io 2.5-inch, SCSI Express-supporting SSDs plugged into the top two ports in the card pictured above. Nigel Poulton says these ports are SFF 8639 ones. The other six ports appear to be SAS ports.

The SCSI Port driver streamlines the Windows storage subsystem by emulating a simplified SCSI adapter. Storage class drivers load on top of the port driver. This means that you can write storage class drivers for Windows with minimal concern for the unique hardware features of each SCSI adapter.

By Chris Mellor

Posted in Storage, 7th December 2011 15:43 GMT

A flash device that can put out 100,000 IOPS shouldn’t be crippled by a disk interface geared to dealing with the 200 or so IOPS delivered by individual slow hard disk drives.

Disk drives suffer from the wait before the read head is positioned over the target track: 11msecs for a random read and 13msecs for a random write on Seagate’s 750GB Momentus. Solid state drives (SSDs) do not suffer from this lag, and PCIe flash cards from vendors such as Fusion-io have shown how fast NAND storage can be when directly connected to servers, meaning 350,000 and more IOPS from its ioDrive 2 products.
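A rough back-of-envelope sketch of that gap, assuming a drive services one request at a time so that IOPS is simply the inverse of per-request latency. The function name is made up for illustration; the latency figures are the Momentus numbers quoted above, and the 100,000 IOPS flash figure is the one from the opening paragraph.

```python
def iops_from_latency_ms(latency_ms: float) -> float:
    """Approximate IOPS for a device that handles one request at a time.

    Assumption: no queueing or overlap, so throughput = 1 / latency.
    """
    return 1000.0 / latency_ms


# Latencies quoted for the Seagate Momentus in the article.
read_iops = iops_from_latency_ms(11.0)
write_iops = iops_from_latency_ms(13.0)

# Flash figure from the article's opening paragraph.
flash_iops = 100_000
ratio = flash_iops / read_iops

print(f"random read:  ~{read_iops:.0f} IOPS")
print(f"random write: ~{write_iops:.0f} IOPS")
print(f"flash vs disk random read: ~{ratio:.0f}x")
```

Under this simplified model the disk lands at well under a hundred IOPS, in the same ballpark as the "200 or so" figure above and roughly three orders of magnitude below the flash device.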

Generation 3 PCIe delivers 1GB/sec per lane, with a 4-lane (x4) gen 3 PCIe interface shipping 4GB/sec.
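As a quick check on those figures, here is a small sketch of the arithmetic. It assumes the published PCIe 3.0 signalling rate of 8 GT/s per lane with 128b/130b line encoding, and it ignores packet and protocol overhead, so real-world throughput would be somewhat lower. The function names are illustrative.

```python
GEN3_TRANSFERS_PER_SEC = 8e9     # PCIe 3.0: 8 GT/s per lane, one bit per transfer
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def lane_bandwidth_gb_per_sec() -> float:
    """Raw payload bandwidth of one gen 3 lane, per direction, in GB/sec."""
    payload_bits_per_sec = GEN3_TRANSFERS_PER_SEC * ENCODING_EFFICIENCY
    return payload_bits_per_sec / 8 / 1e9


def link_bandwidth_gb_per_sec(lanes: int) -> float:
    """Bandwidth of a link made of `lanes` gen 3 lanes, per direction."""
    return lanes * lane_bandwidth_gb_per_sec()


per_lane = lane_bandwidth_gb_per_sec()
x4 = link_bandwidth_gb_per_sec(4)

print(f"per lane: {per_lane:.3f} GB/sec")
print(f"x4 link:  {x4:.2f} GB/sec")
```

The result is just under 1GB/sec per lane and just under 4GB/sec for an x4 link, which matches the rounded numbers above.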

You cannot hook an SSD directly to such a PCIe bus with any standard interface.

You can hook up virtually any disk drive to an external USB interface or an internal SAS or ATA one and the host computer’s O/S will have standard drivers that can deal with it. Ditto for an SSD using these interfaces, but the SSD is sluggardly. To operate at full speed and so deliver data fast and help keep a multi-core CPU busy, it needs an interface to a server’s PCIe bus that is direct and not mediated through a disk drive gateway.

If you could hook an SSD directly to the PCIe bus you could dispense with an intervening HBA that requires power, and that slows down the SSD through a few microseconds of added latency and a design based on hard disk drive connectivity.

There are two efforts to produce standards for this interface: the NVMe and the SCSI Express initiatives.

NVMe

NVMe, standing for Non-Volatile Memory Express, is a standards-based initiative by some 80 companies to develop a common interface. An NVMHCI (Non-Volatile Memory Host Controller Interface) work group is directed by a multi-member Promoter Group of companies – formed in June 2011 – which includes Cisco, Dell, EMC, IDT, Intel, NetApp, and Oracle. Permanent seats in this group are held by these seven vendors, with six other seats held by elected representatives from amongst the other work group member companies.

It appears that HP is not an NVMe member, and most if not all NVMe supporters are not SCSI Express supporters.

The work group released a v1.0 specification in March this year, and details can be obtained at the NVM Express website.

A white paper on that site says:

The standard includes the register programming interface, command set, and feature set definition. This enables standard drivers to be written for each OS and enables interoperability between implementations that shortens OEM qualification cycles. …The interface provides an optimised command issue and completion path. It includes support for parallel operation by supporting up to 64K command queues within an I/O Queue. Additionally, support has been added for many Enterprise capabilities like end-to-end data protection (compatible with T10 DIF and DIX standards), enhanced error reporting, and virtualisation.

The standard has recommendations for client and enterprise systems, which is useful as it means it will embrace the spectrum from notebook to enterprise server. The specification can support up to 64,000 I/O queues with up to 64,000 commands per queue. It’s multi-core CPU in scope and each processor core can implement its own queue. There will also be a means of supporting legacy interfaces, meaning SAS and SATA, somehow.
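To make the per-core queueing idea concrete, here is a toy model, not an NVMe driver. It assumes each core owns one submission/completion queue pair and takes the "64K" limits quoted above to mean 64 × 1024. The names `QueuePair` and `ToyController` are hypothetical and do not come from the specification.

```python
from collections import deque
from dataclasses import dataclass, field

# Limits as quoted in the article; assumed here to mean 64 * 1024.
MAX_QUEUES = 64 * 1024
MAX_QUEUE_DEPTH = 64 * 1024


@dataclass
class QueuePair:
    """One submission queue and its matching completion queue."""
    depth: int
    submission: deque = field(default_factory=deque)
    completion: deque = field(default_factory=deque)

    def submit(self, command: str) -> bool:
        """Queue a command; return False if the submission queue is full."""
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(command)
        return True

    def process_all(self) -> int:
        """Pretend the device finished everything queued so far."""
        done = 0
        while self.submission:
            self.completion.append(self.submission.popleft())
            done += 1
        return done


class ToyController:
    """Gives every CPU core its own queue pair, so cores never share a queue."""

    def __init__(self, cores: int, depth: int) -> None:
        if not 1 <= cores <= MAX_QUEUES:
            raise ValueError("queue count outside the quoted 64K limit")
        if not 1 <= depth <= MAX_QUEUE_DEPTH:
            raise ValueError("queue depth outside the quoted 64K limit")
        self.queues = [QueuePair(depth) for _ in range(cores)]

    def submit(self, core: int, command: str) -> bool:
        return self.queues[core].submit(command)


controller = ToyController(cores=4, depth=2)
accepted = [controller.submit(core=0, command=f"read-{i}") for i in range(3)]
other_core = controller.submit(core=1, command="write-0")
completed = controller.queues[0].process_all()
```

With a depth of 2, the third submission on core 0 is refused while core 1 is unaffected, which is the point of giving each core its own queue: one busy core does not block the others.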

A blog on the NVMe website discusses how the ideal is to have an SSD with a flash controller chip, a system-on-chip (SoC) that includes the NVMe functionality.

What looks likely to happen is that, with comparatively broad support across the industry, SoC suppliers will deliver NVMe SoCs, O/S suppliers will deliver drivers for NVMe-compliant SSD devices, and then server, desktop and notebook suppliers will deliver systems with NVMe-connected flash storage, possibly in 2013.

What could go wrong with this rosy outlook?

Plenty; this is IT. There is, of course, a competing standards initiative called SCSI Express.

SCSI Express

SCSI Express uses the SCSI protocol to have SCSI targets and initiators talk to each other across a PCIe connection; very roughly it’s NVMe with added SCSI. HP is a visible supporter of it, with a SCSI Express booth at its HP Discover event in Vienna, and support at the event from Fusion-io.

Fusion said its “preview demonstration showcases ioMemory connected with a 2U HP ProLiant DL380 G7 server via SCSI Express … [It] uses the same ioMemory and VSL technology as the recently announced Fusion ioDrive2 products, demonstrating the possibility of extending Fusion’s Virtual Storage Layer (VSL) software capabilities to a new form factor to enable accelerated application performance and enterprise-class reliability.”

The SCSI Express standard “includes a SCSI Command set optimised for solid-state technologies … [and] delivers enterprise attributes and reliability with a Universal Drive Connector that offers utmost flexibility and device interoperability, including SAS, SATA and SCSI Express. The Universal Drive Connector also preserves legacy investments and enables support for emerging storage memory devices.”

An SNIA document states:

Currently ongoing in the T10 (www.t10.org) committee is the development of SCSI over PCIe (SOP), an effort to standardise the SCSI protocol across a PCIe physical interface. SOP will support two queuing interfaces – NVMe and PQI (PCIe Queuing Interface).

PQI is said to be fast and lightweight. There are proprietary SCSI-over-PCIe products available from PMC, LSI, Marvell and HP but SCSI Express is said to be, like PQI, open.

The support of the NVMe queuing interface suggests that SCSI Express and NVMe might be able to come together, which would be a good thing and prevent the industry working on separate SSD PCIe-interfacing SoCs and operating system drivers.

There is no SCSI Express website but HP Discover in Vienna last month revealed a fair amount about SCSI Express, which is described in a Nigel Poulton blog.

He says that a 2.5-inch SSD will slot into a 2.5-inch bay on the front of a server, for example, and that “[t]he [solid state] drive will mate with a specially designed, but industry standard, interface that will talk a specially designed, but again industry standard, protocol (the protocol enhances the SCSI command set for SSD) with standard drivers that will ship with future versions of major Operating Systems like Windows, Linux and ESXi”.

A podcast on HP social media guy Calvin Zito’s blog has two HP staffers at Vienna talking about SCSI Express.

SCSI Express productisation

SCSI Express productisation, according to HP, should occur around the end of 2012. We are encouraged (listen to podcast above) to think of HP servers with flash DAS formed from SCSI Express-connected SSDs, but also storage arrays, such as HP’s P4000, being built from ProLiant servers with SCSI Express-connected SSDs inside them.

This seems odd as the P4000 is an iSCSI shared SAN array, and why would you want to get data at PCIe speeds from the SSDs inside to its X86 controller/server, and then ship them across a slow iSCSI link to other servers running the apps that need the data?

It only makes sense to me if the P4000 is running the apps needing the data as well, if the P4000 and app-running servers are collapsed or converged into a single (servers + P4000) system. Imagine HP’s P10000 (3PAR) and X9000 (Ibrix) arrays doing the same thing: its Converged Infrastructure ideas seem quite exciting in terms of getting apps to run faster. Of course this imagining could be just us blowing smoke up our own ass.

El Reg’s takeaway from all this is that NVMe is almost a certainty because of the weight and breadth of its backing across the industry. We think it highly likely that HP will productise SCSI Express, with support from Fusion-io and that, unless there is a SCSI Express/NVMe convergence effort, we’re quite likely to face a brief period of interface wars before one or the other becomes dominant.

Concerning SCSI Express and NVMe differences, EMC engineer Amnon Izhar said: “On the physical layer both will be the same. NVMe and [SCSI Express] will be different transport/driver implementations,” implying that convergence could well happen, given sufficient will.

Our gut feeling is that PCIe interface convergence is unlikely, as HP is quite capable of going its own way; witness the FATA disks of recent years and also its individual and admirably obdurate flag-waving over Itanium. ®